In the age of Big Data, when Data Scientist has been named the “sexiest job of the 21st century”, perhaps it’s only natural that predictive analytics is beginning to replace the super-powered laser as Hollywood villains’ evil weapon of choice. After all, the fundamental science behind each is a mystery to most people who have not studied them directly, and analytics is certainly more present in the public eye today.

I recall watching Captain America: The Winter Soldier with my husband and (poorly) suppressing my amusement at the characters’ reverent use of the word “algorithm”, as if that one word by itself spelled certain doom.

But what is an algorithm, really? Is it deserving of unequivocal fear?

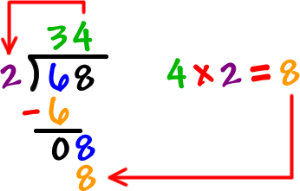


Broadly speaking, an algorithm is a sequence of steps used to get something done. That definition could encompass everyday things like recipes and knitting patterns, as well as long division and neural networks. With such a broad range of algorithms, the word alone shouldn't immediately provoke fear.

But I hear you. Nobody is bragging about the new lemon ricotta pancake algorithm they tried over the weekend. The word "algorithm" typically applies to mathematical problem solving. But even mathematical algorithms don't have to be mysteriously complex processes that only data scientists can understand. Elementary school children learn and use simple mathematical algorithms, such as long division.
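To show just how unmysterious a "mathematical algorithm" can be, here is a minimal Python sketch of pencil-and-paper long division. The function name `long_division` is an illustrative choice, and the sketch assumes non-negative whole numbers. It follows the schoolbook steps: bring down a digit, count how many times the divisor fits, subtract, write down the count, repeat.

```python
def long_division(dividend: int, divisor: int) -> tuple[int, int]:
    """Schoolbook long division of non-negative integers.

    Works through the dividend one digit at a time, left to right, the same
    way it's done with pencil and paper. Returns (quotient, remainder).
    """
    if divisor <= 0 or dividend < 0:
        raise ValueError("expects a non-negative dividend and a positive divisor")

    quotient_digits = []
    remainder = 0
    for digit in str(dividend):
        # Step 1: "bring down" the next digit beside what's left over.
        remainder = remainder * 10 + int(digit)
        # Step 2: count how many times the divisor fits (always 0 to 9).
        times = 0
        while remainder >= divisor:
            remainder -= divisor  # Step 3: subtract, keeping what's left over.
            times += 1
        # Step 4: write that count as the next digit of the answer.
        quotient_digits.append(str(times))

    return int("".join(quotient_digits)), remainder


print(long_division(7835, 25))  # (313, 10)
```

Every step is a simple operation a child can check by hand; a computer just repeats those steps much faster.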

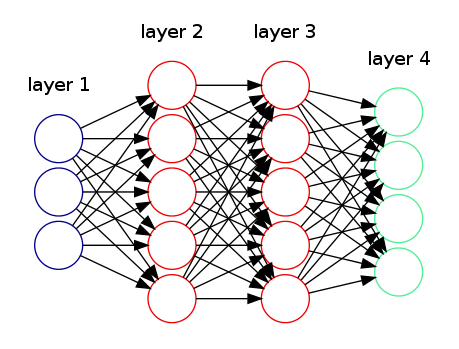

Even complex algorithms could, in theory, be computed by hand; we just have machines perform them so we don't have to do millions of calculations on paper, à la the human computers in Hidden Figures. So why does this term inspire equal parts awe and glazed-over eyes?

As data science has advanced, so have the complexity and reach of its algorithms. Statistical algorithms are being used to predict everything from what shoes you might want to buy on Amazon to whether or not you're a key swing voter, and what messaging you need to hear to vote for a given candidate.

Fans of House of Cards may recall a colorful character whose... um... creative process... my husband will never let me live down.

"All that is necessary for evil to triumph is for good men to do nothing."

Some of these models, like the ones developed by Aidan MacAllan for the corrupt Underwood campaign, can have terrible (intended or unintended) consequences. Think predatory lending practices, or poorly designed employee performance evaluation models. In fact, data scientist Cathy O'Neil details many examples of such models in her recent book Weapons of Math Destruction. Although the book leans a little doom-and-gloom for my tastes, O'Neil does identify some common characteristics of what makes an algorithm "evil". As we've often heard, "all that is necessary for evil to triumph is for good men to do nothing", and that is certainly the case here.

One major factor in shady models is their lack of transparency to the public and/or the people they impact. Most companies consider their models to be intellectual property, and so they're unlikely to disclose the exact variables and coefficients used by the model to come to a particular solution. This lack of transparency is exacerbated by the general fear of algorithms we've already discussed: many people are intimidated by the very idea of statistics, and so they are reluctant to ask questions and unlikely to persist if given a hand-waving, convoluted answer. In essence, we do nothing to understand these models better, and allow evil to triumph.
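To give a sense of how simple "the exact variables and coefficients" can be once they're disclosed, here is a minimal Python sketch of a transparent linear scoring model. Everything in it (the variable names, the coefficients, the intercept, and the approval threshold) is hypothetical and invented for illustration; it is not any real company's model. The point is that with the coefficients in hand, anyone can see how each factor pushed a particular decision.

```python
# A hypothetical, fully disclosed linear scoring model. Every variable name,
# coefficient, and the threshold are invented for illustration only.
COEFFICIENTS = {
    "years_at_current_job": 0.5,
    "missed_payments_last_year": -2.0,
    "debt_to_income_ratio": -4.0,
}
INTERCEPT = 5.0
APPROVAL_THRESHOLD = 6.0


def contributions(applicant: dict[str, float]) -> dict[str, float]:
    """How much each variable pushes this applicant's score up or down."""
    return {name: coef * applicant[name] for name, coef in COEFFICIENTS.items()}


def score(applicant: dict[str, float]) -> float:
    """The score is just the intercept plus the sum of the contributions."""
    return INTERCEPT + sum(contributions(applicant).values())


applicant = {
    "years_at_current_job": 4,
    "missed_payments_last_year": 1,
    "debt_to_income_ratio": 0.25,
}
print(contributions(applicant))
print(score(applicant), score(applicant) >= APPROVAL_THRESHOLD)  # 4.0 False
```

Reading the contributions, this made-up applicant falls 2 points short of the cutoff, and the single missed payment costs exactly 2 points. That is the kind of plain-language explanation a person affected by the decision could reasonably ask for.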

Another major factor is the god-like status that analysts and companies give to their own models. Whether due to arrogance or laziness, some analysts treat models as infallible, not to be questioned. Unfortunately, this usually means they are ill-equipped to explain the models, because they haven't spent much time thinking about them from an outsider's perspective. Furthermore, according to O'Neil, they're less likely to thoroughly analyze the model's performance after it's put into production. They may measure whether profits increased as expected (although even this is shockingly rare, according to some industry articles I've read), but they probably aren't checking whether profits could have increased further with a different decision. Once the model is in place, the analysts do nothing and evil triumphs.

"All models are wrong, but some are useful."

Models are never infallible. We're often given the impression that really smart people program computers such that the ideal answer just emerges on its own like a butterfly from a chrysalis, but that's not even remotely the case. Human beings make decisions about what variables to allow the model to consider, and that alone can lead to oversights or implicit bias in the model. A former classmate reminded me today of a great quote by statistician George Box: "all models are wrong, but some are useful." Even the best model will occasionally predict the wrong outcome. Sometimes Amazon will advertise $40,000 watches to me when I'm logged in. Historically well-performing election predictions sometimes confidently back a losing candidate. The job of the analyst is to choose the model that gets it right most frequently, or earns the most money, or does the least harm. The job of everyone else in the age of Big Data is to be a savvy consumer, unafraid to ask questions.

So don’t let the unknown become frightening, and don’t let the word “algorithm” end the conversation. Ask questions, especially when models hit closer to home, such as in your workplace or your child's school district:

- What factors are important? (age, sex, income, etc.)

- How are customized factors defined? (e.g. "Performance Rating")

- How precise are the model's predictions, compared with how precisely those predictions are used to make decisions? (Does the model predict within +/- 5 points, yet you're not given 5 points' benefit of the doubt?)
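The last question is easier to see with numbers. Below is a minimal Python sketch under made-up assumptions: a model predicts a performance score that is only accurate to within +/- 5 points, and raises hinge on a cutoff of 70. The cutoff, the margin, the function names, and the "needs human review" outcome are all hypothetical. The sketch compares a hard cutoff that treats the prediction as exact with a rule that respects the model's own margin of error.

```python
CUTOFF = 70.0  # hypothetical cutoff for getting a raise
MARGIN = 5.0   # hypothetical model precision: +/- 5 points


def hard_decision(predicted: float) -> str:
    """Treats the prediction as exact: anything below the cutoff is a 'no'."""
    return "raise" if predicted >= CUTOFF else "no raise"


def margin_aware_decision(predicted: float, margin: float = MARGIN) -> str:
    """Gives the benefit of the doubt: if the cutoff falls inside the model's
    error margin, the prediction alone can't settle the question."""
    low, high = predicted - margin, predicted + margin
    if low >= CUTOFF:
        return "raise"
    if high < CUTOFF:
        return "no raise"
    return "too close to call: needs human review"


for predicted in (62.0, 67.0, 73.0, 78.0):
    print(predicted, hard_decision(predicted), "|", margin_aware_decision(predicted))
```

Under the hard cutoff, predictions of 67 and 73 get opposite answers, even though the model can't actually tell them apart; once the +/- 5-point margin is respected, both come back as too close to call.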

These are all questions that decision makers should be able to answer in relatively simple terms when speaking generally about a model, and that they would need to understand in order to describe why an individual decision was made (e.g. why you're not getting a raise this year). You may be surprised by how simple these foreboding models actually are. If, however, the people in power can't answer those questions, they're giving models a god-like status, just like Arnim Zola and Natasha Romanoff did. And that's certainly something to fear.