Cloud Computing and Large Scale Data Architectures

This article is a great, short read (by the excellent Buck Woody, whom I’ve had the fortune to meet at a few conferences) that leads me to two points that I hold close to my heart regarding analytics. As I’m four weeks into my degree, I hope you’ll forgive me if I’m not solid on some of the definitions yet.

1. Predictive analytics isn’t a crystal ball.

You’re never going to be right 100% of the time. I think when people see things like Nate Silver predicting the game incorrectly, they want to make sweeping generalizations about how this proves predictive analytics is all made up and we should just go back to the nice, comfortable world of 100% human judgment.

The problem with this is that there’s a huge difference between predicting whether or not a single event will occur (such as the winner of a specific sports game or election, which I believe is technically forecasting) and predicting the average outcome of a repeated event (such as how much the average customer will buy if shown a certain ad, which I think is predictive modeling). We might be wrong just as often in both cases, but a miss on a single high-profile event has a bigger impact and is easier to point fingers at. Personally, I’d rather do the latter.
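To make that distinction a little more concrete, here is a minimal simulation sketch. Everything in it is an assumption made up for illustration: the 30% purchase probability, the average spend of 20, the exponential spend distribution, and the `simulate_spend` helper are not from the article or from any real data set. It only shows one side of the argument: calls about individual outcomes miss often even when the model is exactly right, while the average over many customers lands close to what the model predicts.

```python
import random
import statistics


def simulate_spend(n_customers, buy_prob, mean_spend, seed):
    """Return one simulated spend per customer (0.0 if they do not buy).

    Hypothetical model: each customer buys with probability buy_prob,
    and buyers spend an exponentially distributed amount.
    """
    rng = random.Random(seed)
    spends = []
    for _ in range(n_customers):
        if rng.random() < buy_prob:
            spends.append(rng.expovariate(1.0 / mean_spend))
        else:
            spends.append(0.0)
    return spends


# Made-up parameters, chosen only for illustration.
BUY_PROB = 0.3
MEAN_SPEND = 20.0

spends = simulate_spend(10_000, BUY_PROB, MEAN_SPEND, seed=0)

# Single-event view: for each customer, call the likelier outcome
# ("will not buy"). The call is wrong whenever the customer buys.
miss_rate = sum(1 for s in spends if s > 0) / len(spends)

# Repeated-event view: predict the average spend per customer and
# compare it with the average actually observed in the simulation.
predicted_average = BUY_PROB * MEAN_SPEND
observed_average = statistics.mean(spends)
average_error = abs(observed_average - predicted_average)

print(f"Individual calls that missed: {miss_rate:.1%}")
print(f"Predicted average spend: {predicted_average:.2f}")
print(f"Observed average spend:  {observed_average:.2f}")
```

Even with a model that matches the simulated world perfectly, roughly three in ten individual calls miss, yet the predicted average stays close to the observed one. Real data is messier than this, so treat it as an illustration rather than a benchmark.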

2. Analytics is far from being an out-of-the-box solution.

Yes, there are and will continue to be solutions popping up to make analytics easier for untrained (and trained) professionals. But the most important problems cannot be reduced to computer algorithms with minimal expert input by the user. If they could, my program wouldn’t have such amazing employment statistics.

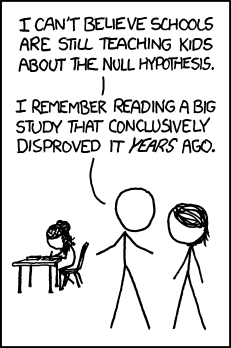

You can plug a data set and some variables into a program, but if you don’t know what you’re doing, you will never have a clue that your metaphorical Siberian meteor is much bigger than you think. At least the JPL researcher knew the weaknesses of his model. Do untrained dabblers know their model outcomes aren’t gospel?

The skill in analytics isn’t in using the software and knowing the secret keystrokes to make models “go”. It’s in knowing how your data is structured, how to detect, diagnose and treat issues, and how to estimate how wrong you might be despite your best efforts.

Just a few of many reasons why I love analytics.